Bagging in Machine Learning Explained

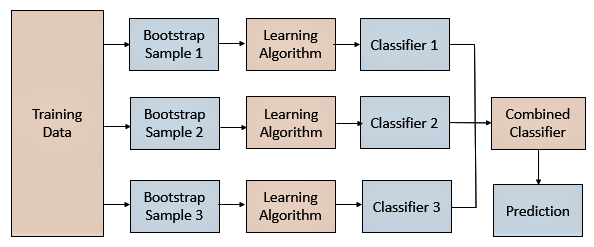

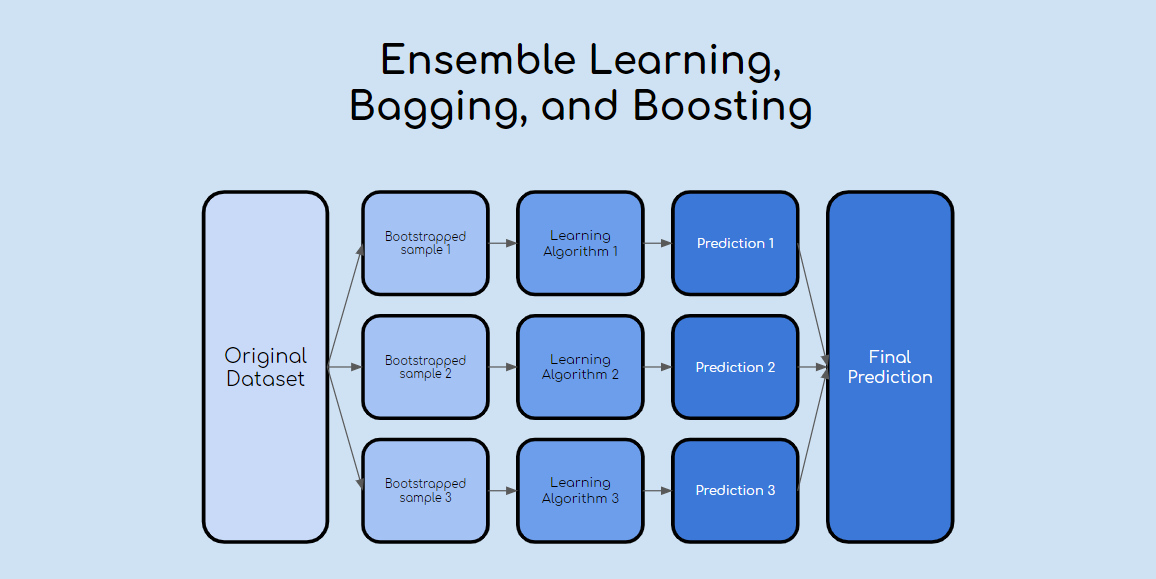

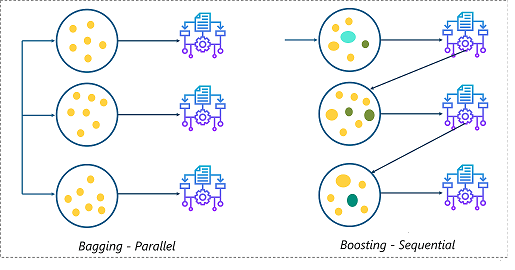

Bagging (short for Bootstrap Aggregating) is a parallel ensemble method that decreases the variance of a prediction model by generating additional training sets, resampled from the original data, during the training stage.

CS 2750 Machine Learning, Lecture 23: Ensemble methods. Milos Hauskrecht, milos@cs.pitt.edu, 5329 Sennott Square.

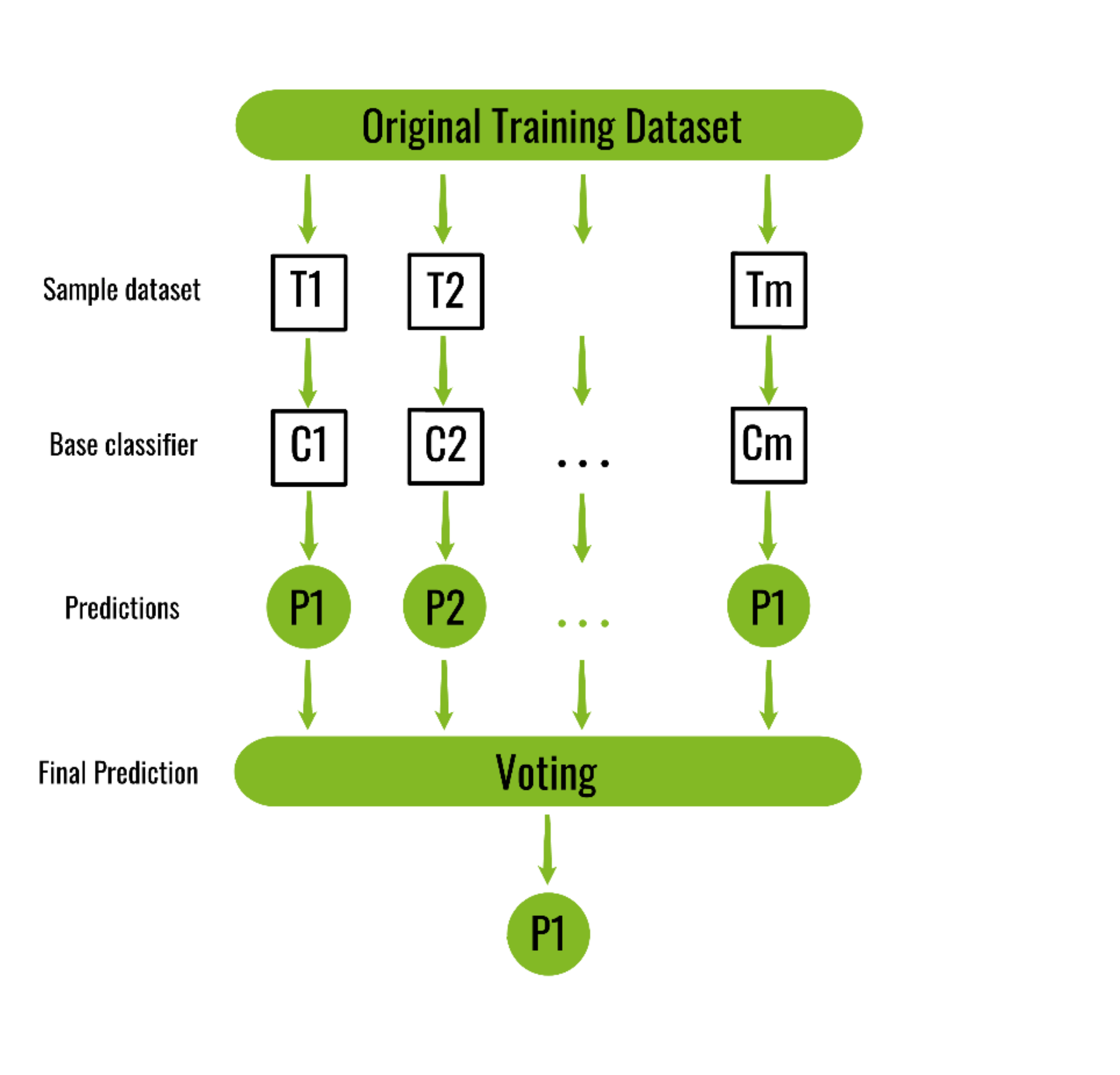

Sampling is done with replacement from the original dataset, and new datasets are formed from these samples.
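A minimal sketch of drawing one bootstrap sample, using only Python's standard library. The toy dataset of ten integer ids and the helper name `bootstrap_sample` are illustrative assumptions, not part of any library.

```python
import random

def bootstrap_sample(data, rng):
    """Return a new dataset of len(data) items drawn uniformly with replacement."""
    return [rng.choice(data) for _ in range(len(data))]

rng = random.Random(42)        # fixed seed so the run is reproducible
original = list(range(10))     # toy dataset: ten instances with ids 0..9
sample = bootstrap_sample(original, rng)
left_out = sorted(set(original) - set(sample))

print("bootstrap sample:", sample)   # some instances appear more than once
print("never drawn:", left_out)      # these are "out-of-bag" for this sample
```

Because the draws are independent, the new dataset has the same size as the original but usually repeats some instances and omits others.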

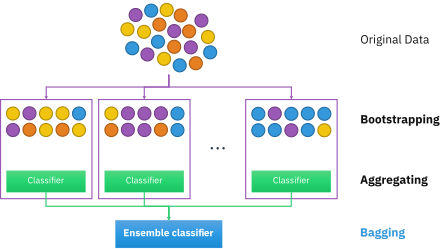

Bagging is composed of two parts: bootstrapping and aggregation. The name is short for Bootstrap Aggregation, and the method is used to decrease the variance of the prediction model. In bagging, the final result is obtained by averaging the responses of the N learners (for regression) or by taking a majority vote (for classification).
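The two aggregation rules can be sketched as plain functions. The names `aggregate_regression` and `aggregate_classification` are hypothetical, and breaking majority-vote ties in favour of the label seen first is an assumption of this sketch; libraries may resolve ties differently.

```python
from collections import Counter
from statistics import mean

def aggregate_regression(predictions):
    """Regression: average the numeric outputs of the N learners."""
    return mean(predictions)

def aggregate_classification(predictions):
    """Classification: majority vote over the N learners' labels.

    Counter.most_common orders equal counts by first occurrence,
    so a tie goes to the label that appears first in `predictions`.
    """
    return Counter(predictions).most_common(1)[0][0]

print(aggregate_regression([2.0, 3.0, 4.0]))               # 3.0
print(aggregate_classification(["spam", "ham", "spam"]))   # spam
```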

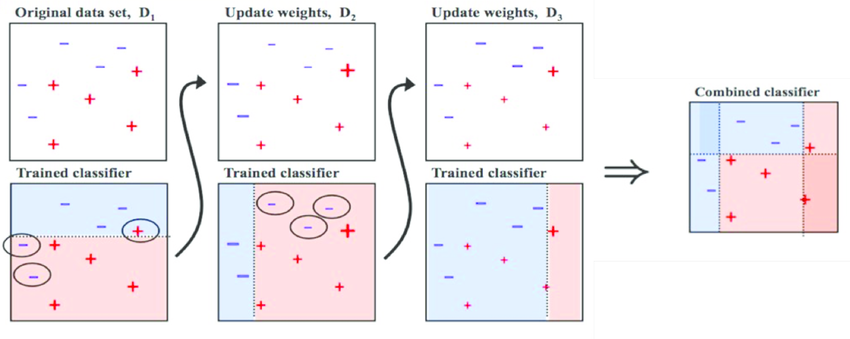

Boosting, by contrast, assigns a second set of weights, this time to the N classifiers, in order to take a weighted average of their estimates. Bagging is used when the objective is to reduce the variance of a decision tree. It is an ensemble method that can be used for both regression and classification.
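To make the contrast concrete, here is a hypothetical `combine` helper for class labels coded as -1/+1. The weights are made-up numbers; how a real boosting algorithm derives them is outside the scope of this sketch.

```python
def combine(predictions, weights=None):
    """Combine -1/+1 class votes from several classifiers.

    weights=None  -> bagging style: every learner gets one equal vote.
    weights=[...] -> boosting style: each learner's vote is scaled by its weight.
    A score of exactly 0 is broken in favour of +1 (an arbitrary choice here).
    """
    if weights is None:
        weights = [1.0] * len(predictions)
    score = sum(w * p for w, p in zip(weights, predictions))
    return 1 if score >= 0 else -1

votes = [+1, -1, -1]                      # three classifiers' predictions for one input
print(combine(votes))                     # -1: the plain majority wins
print(combine(votes, [2.0, 0.5, 0.5]))    # 1: the most trusted classifier outweighs the others
```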

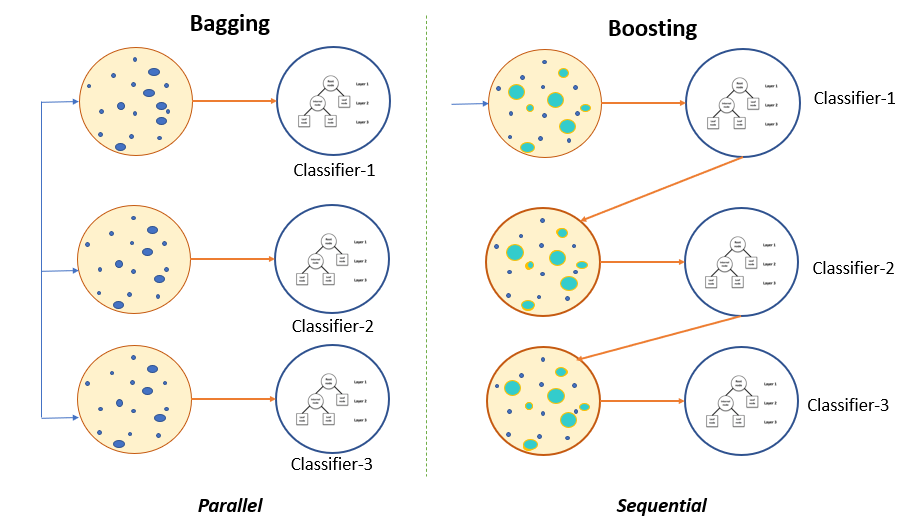

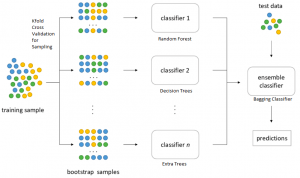

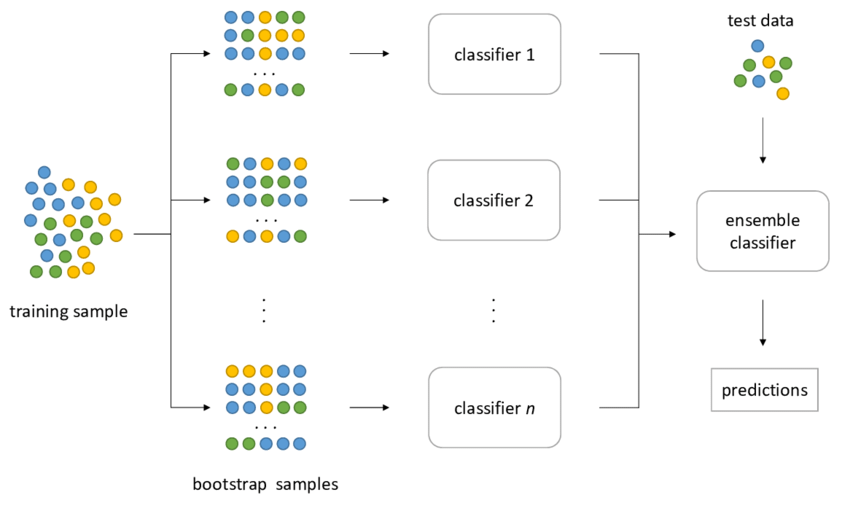

Bagging is the application of the bootstrap procedure to a high-variance machine learning algorithm, typically decision trees. It is a parallel method: the base learners are fitted independently of each other, which makes it possible to train them simultaneously. It decreases the variance and helps to avoid overfitting.
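Because each base learner depends only on its own bootstrap sample, the fits can run concurrently. The sketch below uses a thread pool and a deliberately trivial base learner (it just predicts the mean of its sample); both are assumptions chosen for illustration, not a recommended setup.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from statistics import mean

def fit_on_bootstrap(task):
    """Draw one bootstrap sample and fit a deliberately trivial base learner on it."""
    data, seed = task
    rng = random.Random(seed)                 # one RNG per model, so results do not depend on scheduling
    sample = rng.choices(data, k=len(data))   # bootstrap: sample with replacement
    return mean(sample)                       # hypothetical base learner: "predict the sample mean"

data = [1.0, 2.0, 3.0, 4.0, 100.0]            # toy training targets
seeds = range(8)                              # eight independent models

# The fits share no state, so they can all be submitted to the pool at once.
with ThreadPoolExecutor(max_workers=4) as pool:
    models = list(pool.map(fit_on_bootstrap, [(data, s) for s in seeds]))

bagged_prediction = mean(models)              # aggregate by averaging
print(models)
print(bagged_prediction)
```

Giving each model its own seeded random generator keeps the result independent of the order in which threads finish. In CPython, threads do not speed up CPU-bound training because of the global interpreter lock, so a process pool would be the more realistic choice for heavy models.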

A bagging classifier is an ensemble meta-estimator that fits base classifiers, each on a random subset of the original dataset, and then aggregates their individual predictions, either by voting or by averaging, to form a final prediction. A classifier is trained on each of these subsets. Bagging and boosting are similar in that both are ensemble techniques in which a set of weak learners is combined to create a strong learner that performs better than any single one.
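The meta-estimator idea can be sketched from scratch. This is not scikit-learn's `BaggingClassifier`; it is a hypothetical `BaggingSketch` class with an equally hypothetical one-dimensional decision stump as the base classifier, assumed here only to keep the example short and dependency-free.

```python
import random
from collections import Counter

def fit_stump(xs, ys):
    """Hypothetical base learner: a 1-D stump "predict 1 if x >= t else 0".

    Tries every observed x as the threshold t (plus +infinity, i.e. "always 0")
    and keeps the one with the fewest training errors.
    """
    best_t, best_err = float("inf"), sum(ys)   # t = +inf predicts 0 everywhere
    for t in sorted(set(xs)):
        err = sum((x >= t) != bool(y) for x, y in zip(xs, ys))
        if err < best_err:
            best_t, best_err = t, err
    return lambda x: int(x >= best_t)

class BaggingSketch:
    """Minimal bagging meta-estimator: fit copies of a base learner on random
    subsets drawn with replacement, then combine them by majority vote."""

    def __init__(self, fit_base, n_estimators=25, max_samples=1.0, seed=0):
        self.fit_base = fit_base            # function (xs, ys) -> predict(x)
        self.n_estimators = n_estimators
        self.max_samples = max_samples      # fraction of the training set per subset
        self.rng = random.Random(seed)

    def fit(self, xs, ys):
        n = len(xs)
        k = max(1, int(self.max_samples * n))
        self.models = []
        for _ in range(self.n_estimators):
            idx = [self.rng.randrange(n) for _ in range(k)]   # indices drawn with replacement
            self.models.append(self.fit_base([xs[i] for i in idx], [ys[i] for i in idx]))
        return self

    def predict(self, x):
        votes = Counter(model(x) for model in self.models)   # one vote per base classifier
        return votes.most_common(1)[0][0]

xs = list(range(20))
ys = [int(x >= 10) for x in xs]             # toy labels: class 1 from x = 10 upward
model = BaggingSketch(fit_stump, n_estimators=25, seed=1).fit(xs, ys)
print(model.predict(2), model.predict(17))
```

Setting `max_samples` below 1.0 gives smaller random subsets. A stump is a fairly stable, high-bias learner, so this example demonstrates the mechanics of bagging rather than a large variance reduction.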

Bagging is a powerful ensemble method that helps to reduce variance and, by extension, to prevent overfitting. The main steps involved in bagging are: draw bootstrap samples from the training set, train one base model on each sample, and aggregate the models' predictions.

Bagging, also known as bootstrap aggregating, is the process in which multiple models of the same learning algorithm are trained on bootstrapped samples of the original dataset. Bagging and boosting are the two main methods of ensemble machine learning.

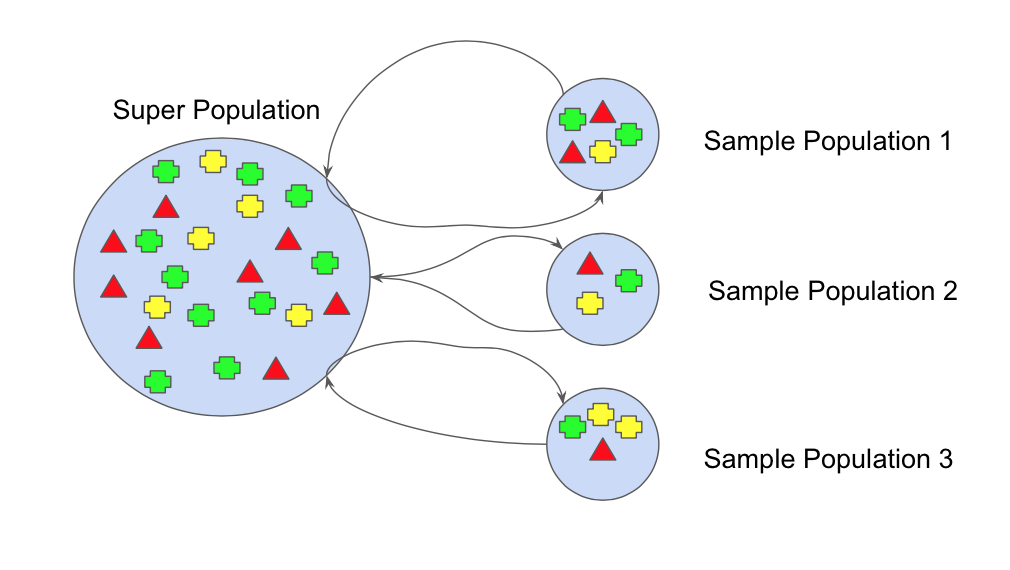

It improves the stability and accuracy of machine learning algorithms used in statistical classification and regression. The first way to build multiple models is to build the same machine learning model multiple times from the same available training data. The principle is easy to understand: instead of fitting the model on one sample of the population, several models are fitted on different samples drawn with replacement from the population.

Bagging is a powerful method to improve the performance of simple models and to reduce the overfitting of more complex models. Ensemble models perform best when the predictors they are made of are very different from one another. Bagging, short for bootstrap aggregating, creates each dataset by sampling the training set with replacement.
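A standard calculation shows why variety among the predictors matters. Assume B predictors, each with variance $\sigma^2$ and pairwise correlation $\rho$ (a simplifying assumption, since real bagged models are not exactly identically distributed). The variance of their average is

$$
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B}\hat f_b(x)\right)
= \frac{1}{B^2}\Big(B\sigma^2 + B(B-1)\rho\sigma^2\Big)
= \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2 .
$$

As $B$ grows the second term vanishes but the first does not, so the less correlated (more different) the predictors, the lower the variance of the ensemble.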

These models are then aggregated by combining their predictions. So let's start from the beginning: ensemble methods improve model accuracy by using a group (or ensemble) of models which, when combined, outperform individual models.

Let's assume we have a sample dataset of 1000 instances (x) and we are using the CART algorithm. Bagging first draws several random subsets of these instances with replacement. Each subset is then used to train its own decision tree, so we end up with an ensemble of different models. This reduces variance and helps to avoid overfitting.
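The subset-creation step for this example can be sketched with the standard library. The ensemble size of 5 and the seed are hypothetical choices, and the tree training itself is only indicated by a comment, since CART is not part of the standard library.

```python
import random

N_INSTANCES = 1000   # the 1000 instances x from the example
N_MODELS = 5         # hypothetical ensemble size; real ensembles often use many more trees

rng = random.Random(7)
# One list of row indices per model, each drawn with replacement from 0..999.
subsets = [[rng.randrange(N_INSTANCES) for _ in range(N_INSTANCES)]
           for _ in range(N_MODELS)]

for b, rows in enumerate(subsets):
    distinct = len(set(rows))
    # A real pipeline would train one CART tree on the rows listed in `rows` here.
    print(f"subset {b}: {distinct} distinct instances, {N_INSTANCES - distinct} out-of-bag")
```

Each subset typically contains around 630 distinct instances; the rest are out-of-bag for that tree, which is one reason the trees end up different from each other.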

Bagging is also known as bootstrap aggregation, a name that reflects its two components: bootstrapping and aggregation. While in bagging the weak learners are trained in parallel using randomness, in boosting the learners are trained sequentially, so that each one can perform the task of data weighting/filtering based on the mistakes of the learners before it.

Bagging, boosting, and stacking are all so-called meta-algorithms. Bagging generates additional training data by resampling the original dataset, and it is usually applied to decision tree methods.

Bootstrap aggregating, also known as bagging, is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms used in statistical classification and regression. A closely related method, pasting, creates each dataset by sampling the training set without replacement.
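The difference between bagging and pasting comes down to one sampling call. This sketch uses Python's `random.Random.choices` (with replacement) and `random.Random.sample` (without replacement); the toy training set and the subset size of 8 are assumptions.

```python
import random

rng = random.Random(3)
train = list(range(12))   # toy training set of 12 instance ids
k = 8                     # subset size (pasting needs k <= len(train))

bagging_subset = rng.choices(train, k=k)   # with replacement: duplicates possible
pasting_subset = rng.sample(train, k=k)    # without replacement: all distinct

print("bagging:", sorted(bagging_subset))
print("pasting:", sorted(pasting_subset))
```

Pasting only makes sense with subsets smaller than the training set: if `k` equalled `len(train)`, every pasting subset would contain the whole training set and all models would see identical data.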

The main hypothesis is that if we combine the weak learners the right way, we can obtain more accurate and/or more robust models. Each bootstrap sample is produced by random sampling with replacement from the original set: the idea is to create a few subsets of data from the training sample, chosen randomly with replacement.
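A short calculation shows how much of the original set one bootstrap sample actually covers. With $n$ instances, each draw misses a given instance with probability $1 - 1/n$, and the $n$ draws are independent, so

$$
P(\text{instance } i \notin \text{sample}) = \left(1 - \frac{1}{n}\right)^{n} \xrightarrow{\;n \to \infty\;} e^{-1} \approx 0.368 .
$$

Each bootstrap sample therefore contains, on average, about 63.2% of the distinct original instances; for $n = 1000$ the left-out probability is already about 0.368.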

Ensemble methods are approaches that combine several machine learning techniques into one predictive model in order to decrease variance (bagging) or bias (boosting), or to improve predictive force (stacking, alias ensemble). Every algorithm consists of two steps: producing a distribution of simple models on subsets of the original data, and combining that distribution into one aggregated model. In bagging, a vote is then taken on all of the models' outputs, as in a random forest.

Ensemble learning is a machine learning paradigm where multiple models, often called weak learners or base models, are trained to solve the same problem and combined to get better results. Don't worry: even if we use the same training data to build the same machine learning algorithm, all the models will still be different, because each one is trained on a different random sample of that data.

The biggest advantage of bagging is that multiple weak learners can work better than a single strong learner. The bias-variance trade-off is a challenge we all face while training machine learning algorithms.
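A small simulation illustrates the variance side of this trade-off. It assumes the idealized case of fully independent estimators with Gaussian noise (σ = 1), which bagged models never quite reach because their bootstrap samples overlap; the numbers are illustrative, not a benchmark.

```python
import random
from statistics import mean, pvariance

rng = random.Random(0)
SIGMA = 1.0     # noise level of each idealized, independent estimator (assumption)
B = 10          # number of estimators being averaged
TRIALS = 5000   # repetitions used to estimate each variance

single = [rng.gauss(0.0, SIGMA) for _ in range(TRIALS)]
averaged = [mean(rng.gauss(0.0, SIGMA) for _ in range(B)) for _ in range(TRIALS)]

print(f"variance of one estimator:      {pvariance(single):.3f}")    # close to SIGMA**2
print(f"variance of the average of {B}: {pvariance(averaged):.3f}")  # close to SIGMA**2 / B
```

Averaging leaves the expected prediction (the bias) unchanged while shrinking the spread, which is the effect bagging relies on.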

Boosting should not be confused with bagging, which is the other main family of ensemble methods. Bagging and boosting are both ensemble methods in machine learning, but what is the key idea behind each of them?

In the boosting training stage, the algorithm allocates a weight to each resulting model.
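The text does not say how these model weights are computed. One common choice, used by AdaBoost, is α = ½ ln((1 - ε)/ε), where ε is the model's weighted training error; the sketch below assumes that formulation, and other boosting algorithms weight their models differently.

```python
import math

def adaboost_model_weight(error_rate):
    """Weight of one boosted model under the AdaBoost formulation assumed here:
    alpha = 0.5 * ln((1 - err) / err). Requires 0 < err < 1."""
    if not 0.0 < error_rate < 1.0:
        raise ValueError("error_rate must be strictly between 0 and 1")
    return 0.5 * math.log((1.0 - error_rate) / error_rate)

for err in (0.1, 0.3, 0.5):
    print(f"weighted error {err:.1f} -> model weight {adaboost_model_weight(err):.3f}")
```

A model at chance level (ε = 0.5) gets weight 0, and a lower error gives the model a larger say in the final weighted vote.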
